
Photo Album (constantly updated)

Table of Contents

- A LARGE warning!

- PalmOS Architecture (and a bit of history)

- Towards the first unauthorized PalmOS port

- Towards the first pirate PalmOS device

- We need hardware, but developing on hardware is ... hard

- Um, but now we need a kernel...

- So, uh, what about all that pesky ARM code?

- You do not mean...? (pt2)

- A JIT's job is never over

- Is PACE fast enough?

- But, you promised hardware...

- Tales of more PalmOS reverse engineering

- Real hardware: reSpring

- So where does this leave us?

- Article update history

- Comments...

A LARGE warning!

This is a pre-release article about a pre-release project. I will update both, so this is not a static document. Keeping track of changes is your job, if you so choose; all I can promise you is that I'll keep a changelist at the bottom of the article.

PalmOS Architecture (and a bit of history)

History

PalmOS before 5.4 kept all data in RAM in databases. They came in two types: record databases (what you'd imagine them to be) and resource databases (similar to classic MacOS resources). Each database had a type and a creator ID, each a 32-bit integer, customarily with each 8-bit piece being an ASCII char. Most commonly an application would create databases with the creator ID set to its own. Certain types also had meaning: for example, appl was an application and panl was a preference panel.
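To make the "32-bit integer made of four ASCII chars" idea concrete, here is a minimal sketch of packing and unpacking such a code. The function names are made up for illustration, and it assumes the first character lands in the most significant byte, as a four-character constant would on a big-endian m68k.

```python
def fourcc_to_int(code: str) -> int:
    """Pack four ASCII characters into one 32-bit integer (first char in the top byte)."""
    raw = code.encode("ascii")
    if len(raw) != 4:
        raise ValueError("type/creator codes are exactly four ASCII characters")
    return int.from_bytes(raw, "big")


def int_to_fourcc(value: int) -> str:
    """Unpack a 32-bit integer back into its four ASCII characters."""
    return value.to_bytes(4, "big").decode("ascii")


# 'appl' is the type used for applications, as mentioned above.
APPL = fourcc_to_int("appl")
print(hex(APPL), int_to_fourcc(APPL))
```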

PalmOS started out on Motorola 68k processors and ran on them from first development all the way to version 4.x. For version 5, Palm Inc chose to switch to ARM processors, as they allowed a lot more speed (which is always a plus). But what to do about all the software? Lots of PalmOS apps were written for OS 4.x and compiled for the m68k processor. Palm Inc introduced PACE - Palm Application Compatibility Environment. PACE intercepted the OsCall SysAppLaunch (and a number of others) and emulated an m68k processor, allowing all the old software to run. When an m68k app called an OsCall, PACE would translate the parameters and call the native ARM OsCall. This meant that while the app's logic was running in emulation, all OsCalls were native ARM and fast. Combine this with the fact that PalmOS 4.x devices usually ran at 33MHz while PalmOS 5.x devices usually ran at hundreds of MHz, and there was almost no slowdown: most old apps compiled for PalmOS 4.x ran at a perfectly good speed. It was even good enough for Palm Inc, since most built-in apps (like calendar and contacts) were still m68k apps, not ARM. There was also PalmOS 6.x (Cobalt), but it never really saw the light of day and is beyond the scope of this document.

Palm Inc never documented how to write full Native ARM applications on PalmOS 5.x. It was possible, but not documented. The best official way to get the full speed of the new ARM processors was to use the OsCall PceNativeCall to jump into a small bit of native ARM code that Palm Inc called "ARMlet"s and later "PNOlet"s. Palm said that only the hottest pieces of code should be treated this way, and it was rather hard to call OsCalls from these bits of native ARM code (you had to call back into PACE, which would marshal the parameters for the native API and then call it). The ways to call the real Native OsCalls were also not documented.

PalmOS 5.x kept a lot of the design of PalmOS 4.x, including the shared heap, lack of protected memory, and lack of proper documented multithreading. A new thing was that PalmOS 5.x supported loadable modules. In fact, every Native ARM application or library in PalmOS 5.x is a module. Each module has a module ID, which is required to be system-unique and exist in the range of 0..1023. This is probably why Palm Inc never documented how to produce full Native applications - they could never allow more than 1024 of them to exist.

PalmOS licensees (Sony, Handspring, etc) got the sources to the OS and all of this knowledge, of course. They were able to customize the OS as needed before shipping it, but the architecture was always mostly the same. This also aids us a lot.

Modules? Libraries? DALs? Drivers?

The kernel of the OS, memory management, most of the drivers, and low level CPU wrangling are handled by the DAL. DAL (Module ID 0) exports about 200 OsCalls, give or take based on the PalmOS version. These are low level things like getting battery state, raw access to screen drawing primitives, module loading and unloading, memory map management, interrupt management, etc. Basically these are functions that no user-facing app would ever need to use. On top of the DAL lives Boot. Boot (Module ID 1) provides a lot of the lower-level user-facing OsCalls. Implemented here are things like the DataManager, MemoryManager, AlarmManager, ExchangeManager, BitmapManager, and WindowManager. Feel free to refer to the PalmOS SDK for details on all of those. On top of Boot lives UI. UI (Module ID 2) provides all of the UI primitives to the user. These are things like controls (buttons, sliders, etc), forms, menus, tables, and so on. These three modules together make up the core of PalmOS. You could, in fact, almost boot a ROM containing just these three files.

These first three modules are actually somewhat special, being the core of the OS. They are always loaded, and their exported functions are always accessible via a special shortcut. For modules 0, 1, and 2, you can call an exported function number N by executing these two instructions: LDR R12, [R9, #-4 * (module_ID + 1)]; LDR PC, [R12, #4 * func_no]. This shortcut exists for easy calls to OsCalls by native modules and only works because these modules are always loaded. This is not a general rule, and this will NOT work for any other modules. You might ask if one can also write to these tables of function pointers to replace them. Yes, yes you can and this was often done by what were called "hacks" and also is liberally used by the OS itself (but not via direct writes but via an OsCall: SysPatchEntry).
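The two-instruction shortcut is just a pair of dependent table lookups. The following sketch models it over a toy memory (a Python dict from address to 32-bit word); the addresses and the helper name are invented for illustration, and only the offsets come from the instruction sequence quoted above.

```python
def oscall_target(mem, r9, module_id, func_no):
    """Resolve exported function `func_no` of core module `module_id` (0, 1 or 2)."""
    if module_id not in (0, 1, 2):
        raise ValueError("the shortcut only works for DAL, Boot and UI")
    # LDR R12, [R9, #-4 * (module_ID + 1)]  -> pointer to the module's export table
    r12 = mem[r9 - 4 * (module_id + 1)]
    # LDR PC, [R12, #4 * func_no]           -> address of the exported function
    return mem[r12 + 4 * func_no]


# Toy layout (all addresses are made up): R9 = 0x2000, Boot's table at 0x1100.
R9 = 0x2000
memory = {
    R9 - 4: 0x1000,             # module 0 (DAL) export table pointer
    R9 - 8: 0x1100,             # module 1 (Boot) export table pointer
    0x1100 + 4 * 3: 0x80001234  # Boot export #3
}
print(hex(oscall_target(memory, R9, 1, 3)))
```

Patching an entry, as the "hacks" did, amounts to overwriting one word in the second table, which is why a single write redirects every caller at once.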

PalmOS lacks any memory protection, so any user code can access hardware. PalmOS actually uses this - things like SD card drivers and drivers for other peripherals are usually separate modules and not part of the DAL. The Boot module will load all PalmOS resource databases of certain types at boot, allowing them to initialize. An incomplete list of these types is: libs (slot driver), libf (filesystem driver), vdrv (serial port driver), aext (system extension), aexo (OEM extension). These things being separate is actually very convenient, since that means that they can be easily removed/replaced. There are of course corner cases, since PalmOS developers never anticipated this. For example, if NO serial drivers are loaded, the OS will crash as it never expected this. Luckily, this is also easy to work around.

Anytime a module is loaded, the entry point is called with a special code, and the module is free to initialize, set up hardware, etc. When it is unloaded, it gets another code, and can deinitialize. There is another special code modules can get, and that one comes from PACE. If you remember, I said that PACE marshals parameters from m68k apps to OsCalls and back, but PACE cannot possibly know about parameters that a random native library takes, so the marshalling there must be done by the library itself. This special code is used to tell the library to: read parameters from the m68k emulated stack, process them, and put the result into the emulated m68k registers (PACE exports functions to actually manage the emulated state, so the libraries do not need to know of its insides).

Towards the first unauthorized PalmOS port

So what's so hard?

As I mentioned, none of the native API of PalmOS 5.x was ever documented. There was a small number of people who figured out some parts of it, but nobody really got it all, or even close to it. To start with, because large parts are not useful to an app developer, and thus attracted no interest. This is a problem, however, if one wants to make a new device. So I had to actually do a lot of reverse engineering for this project - a lot of boring reverse engineering of very boring APIs that I still had to implement. Oh, and I needed a kernel, and actual hardware to run on.

To start with, I wrote a tool to split apart and put back together working PalmOS ROM images. The format is rather convoluted, and changed between versions, but after a lot of work the "splitrom" tool can now successfully split a PalmOS ROM from pre-release pre-v.1.0 PalmOS devices all the way to the PalmOS 6.0 cobalt ROMs. The "mkrom" tool can now produce valid PalmOS 5.x images - I never bothered to actually make it produce other versions as I did not need it. At this point I took a detour from the project to collect PalmOS ROMs. I now have one from almost every device and prototype. I'll share them with the world later. I tested this by pulling apart a T|T3 ROM, replacing some files, putting it back together, and reflashing my T|T3. It booted! Cool!

So write a DAL and you're done!

I had no hardware to test on, no kernel to use, and a lot more "maybe"s than I was willing to live with, so it was time for action. The quickest way I could think of to try it was to use a real ARM processor and an existing kernel - Linux. Since my desktop uses an x86 processor and not ARM, qemu was used. I wrote a rudimentary DAL that simply logged any function called and then crashed on purpose. At boot, it did the same as PalmOS's DAL does: load Boot and, in a new thread, call the PalmOSMain OsCall. I then wrote a simple "runner" app that used mmap() to map an area of memory at a particular location backed by "rom.bin" and another by "ram.bin" and tried to boot it. I got some logged messages and a crash, as expected. Cool! I guess the concept could work. So, what is the minimum number of functions my DAL needs to boot? Turns out: most of them! Sad day...

Minimal DAL

It took months, but I got most of the DAL implemented, and it ran inside my "runner" inside qemu. It was a very scary setup. Since it was all a userspace app under Linux, I had to call back out to the "runner" to request things like thread creation, etc. It was a mess. Current rePalm code still supports this mode, but I do not expect to use it much, for a variety of reasons. To start with, Linux kernel lacks some API that PalmOS simply needs, for example ability to disable and re-enable task switching. Yup... PalmOS sometimes asks for preemption to be disabled. Linux lacks that ability. PalmOS also needs ability to remotely pause and resume a thread, without the thread's consent. The pthreads library lacks such ability as well. I hacked together some hacks using ptrace, but it was a mess. Fun story: since my machine is multi-core, and I never set any affinities, this was the first time ever that PalmOS ran on a multi-core device. I did not realize it till much later, but that is kind of cool, no?

Drawing is hard

There was one problem. For some reason, things like drawing lines, rectangles, circles, and bitmaps were all part of the DAL. Now, it is not hard to draw a line, but things like "draw a rounded rectangle with foreground color of X and a background color of Y, using drawing mode 'mask' on this canvas" or "draw this compressed 16-bit full-color 144ppi image on this 4-bits-per-pixel 108ppi canvas with dithering, respecting transparency colors, and using 'invert' mode" or even "print string 'Preferences' with background color X, foreground Y, text color Z, dotted-underlined, using this low-density font on this 1.5 density canvas" get convoluted quickly. And yes, the DAL is expected to handle this all. Oh, and none of this was ever documented of course! This was a nightmare. At first I treated all drawing functions as NOPs and just logged the drawn text to know how far my boot had gotten. This allowed me to implement many of the other OsCalls that DAL must provide, but eventually I had to face having to draw. My first approach was to just implement things myself, based on function names and some reverse engineering. This approach failed quickly - the matrix of possibilities was simply too large. There are 8 drawing modes, 3 supported densities, 4 image compression formats, 5 supported color depths, and two font formats. It was not possible to think of everything, especially with no way to be sure I had it right. I am not sure if some of these modes ever got exercised by any software in existence at all, but it did not matter - it had to be pixel exact! What to do?
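For a rough sense of scale, the counts quoted above can simply be multiplied out. This is a naive upper bound that assumes every axis is independent, which is not quite true (font formats only matter for text, compression only for bitmaps), so treat the number as an order-of-magnitude illustration rather than an exact test matrix.

```python
from math import prod

# Axis sizes as stated in the text; the dict keys are just labels.
axes = {
    "drawing modes": 8,
    "densities": 3,
    "image compression formats": 4,
    "color depths": 5,
    "font formats": 2,
}

combinations = prod(axes.values())
print(combinations)
```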

Theft is a form of flattery, right?

I decided on a stopgap measure. I disassembled the Zire72 DAL. And I copied each of the necessary functions, and all the functions they called, and all of the functions those functions called, and so on. I then cleaned up their direct references to Zire DAL's globals, and to each other, and I stuck it all into a giant "drawing.S" file. It was over 30,000 lines long, and I mostly had no idea how it worked. Or if it worked...

It did! Not right away, of course, but it did. Colors were messed up, artifacts everywhere, but I saw the touchscreen calibration screen after boot! Success, yes? Well, not even remotely. To start with, it turns out that in the interest of optimization, PalmOS's drawing code happily sticks its fingers into the display driver's globals. My display "driver" at this point was just an area of memory backed by an SDL surface. It took a lot of work (throwaway work - the worst kind) to figure out what it was looking for and give it to it. But after a few more weeks, Zire72's DAL's drawing code happily ran under rePalm and I was able to see things drawn correctly. After hooking up rudimentary fake touchscreen support, I was even able to interact with the virtual device and see the home screen. Great, but this was all a waste. I do not own that code and cannot ship it. I also cannot improve it, expand it, fix it, or even claim to entirely understand it. This was not a path forward.

Meticulously-performed imitation is also a form of flattery, no?

The time had come. I rewrote the drawing code. Function by function. Line by line. Assembly statement by assembly statement. I tested it after replacing every function as best as I could. Along the way I gained an understanding of how PalmOS draws, what shortcuts there are for which common cases, etc. This effort took two months, and at the end of it 30,000 lines of uncommented assembly had turned into 8,000 lines of C. rePalm finally was once again purely my own code! Along the way I optimized a few things and added support for one-and-a-half density, something that the Zire72 DAL never supported. Of all the parts of this project, this was the hardest to slog through, because at the end of every function decoded, understood, and rewritten, there was no noticeable movement forward - the goal was just to not break anything, and there were always tens of thousands of lines of code left to disassemble, understand, and rewrite in C.

Virtual SD card

For testing, it would be convenient to be able to load programs into the device more easily than by baking them into the ROM. I wrote a custom slot driver that did nothing except allow you to use my custom filesystem. That filesystem used hypercalls to reach code in the "runner" to perform filesystem ops on the host. Basically this created a shared folder between my PC and rePalm. I used this to verify that most software and games worked as expected.

Which device ROM are you using?

ANY! I tested pre-production Tungsten T image, I tested LifeDrive image, even Sony TH55 ROM boots! Yes, there were custom per-device and per-OS-version tweaks, but I was able to get them to apply automatically at runtime. For example, determining which OS version is running is easily done by examining the number of exported entrypoints of Boot. And determining if the ROM is a Sony device is easy by looking for SonyDAL module. We then refuse to load it, and fake-export equivalent functions ourselves. Why does the DAL need to know the OS version? Some DAL entrypoints changed between PalmOS 5.0 and PalmOS 5.2, and PalmOS 5.4 or later expect a few extra behaviours out of existing funcs that we need to support.

So you're done, right? It works?

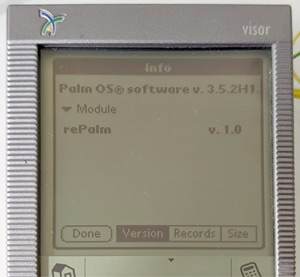

At this point, rePalm sort of worked. It was a window on my desktop that ran REAL UNMODIFIED PalmOS with only a single file in the ROM replaced - the DAL. Time to call it done, and pick a new project, right? Well, not quite. Like I said, Linux was not an ideal kernel for this, and making a slightly-more-open PalmOS simulator was not my goal. I wanted to make a device...

Towards the first pirate PalmOS device

A little bit about normal PalmOS 5.x devices, their CPUs, and the progress since...

In order to understand the difficulties I faced, it is necessary to explain some more about how PalmOS 5.x devices usually worked. PalmOS 5.x targeted ARMv4T or ARMv5 CPUs. They had 4-32MB of flash or ROM to contain the ROM, and 8-128MB of RAM for runtime allocations and data storage. PalmOS 5.4 added NVFS, which I shall for now pretend does not exist (as we all wished we could when NVFS first came out). ARMv4T and ARMv5 CPUs implement two separate instruction sets: ARM and Thumb. ARM instructions are each exactly 4 bytes, and are the original instruction set for ARM CPUs. Thumb was added in v4T as a method of improving code density. It is a set of 2-byte long instructions that implement the most common operations the code might want to do, and by being half the size improve code density. Obviously, you do not get something for nothing. In the CPUs back then, Thumb instructions had one extra pipeline stage, so this caused them to be slower in code with a lot of jumps. Also, as the instructions themselves were simpler, sometimes it took more of them to do the same thing. Thumb instructions, in most cases, also only have access to half as many registers as ARM instructions, further leading to slightly less optimal code. But, in general, Thumb code was smaller, and where speed was not a factor, large parts of PalmOS were compiled in Thumb mode. (Sony bucks this trend, having splurged for larger flash chips and compiling the entire OS in ARM mode). Some things could not be done in Thumb at all, for example a 32x32->64 bit multiply, and some were very suboptimal to do in Thumb (like a lot of the drawing code with a lot of complex bit shifts and addressing). These speed-critical pieces were always compiled in ARM mode in PalmOS. Also, all library entry points were always in ARM mode with no other options, so even libraries entirely compiled as Thumb had small thunks from ARM to Thumb mode on each entrypoint.

How does one actually switch modes between ARM and Thumb in ARMv5? Certain, but not all, instructions that change control flow perform the change. Since all ARM instructions are 4-bytes long and always aligned on a 4-byte boundary, any valid ARM instruction's address has the low two bits cleared. Thumb instructions are 2 bytes long, and thus have the bottom one bit cleared. 32-bit-long Thumb2 instructions are also aligned on a 2-byte boundary. This means that for any instruction in any mode, the lower bit of its address is always clear. ARM used this fact for mode switching. The BX instruction would now look at the bottom bit of the register you're jumping to, and if it was 1, treat the destination as Thumb, else as ARM. Any instruction that loads PC with a word will do the same: POP, LDM, LDR instructions. Arithmetic done on PC in Thumb mode does not change to ARM mode ever (low bit ignored) and arithmetic done on PC in ARM mode is undefined if the lower 2 bits produced are nonzero (CAUTION: this is one of the things that ARMv7 changed: this now has defined behaviour). Also an extra instruction was added for easy calls between modes: BLX. There is a form of it that takes a relative offset encoded in the instruction itself, which basically acts like a BL, but also switches modes to whatever NOT the current mode is. There is also a register mode of it that combines what a BX does with saving the return address. Of course to make sure that returns to Thumb mode work as expected, Thumb instructions that save a return address, namely BL and BLX set the lower bit of LR.
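The low-bit rule is easy to model. The sketch below (helper names are invented) shows how a BX-style branch would pick the mode from bit 0 of the target, and how a Thumb-mode BL/BLX would tag its return address. Raising an error for an ARM target with bit 1 set is this sketch's own choice for a case the architecture leaves unpredictable on these cores.

```python
def interworking_branch(target):
    """Model BX (or a PC-loading LDR/LDM/POP): return (new_pc, is_thumb)."""
    if target & 1:
        # Low bit set: destination is Thumb; the bit itself is not part of the address.
        return target & 0xFFFFFFFE, True
    if target & 2:
        # ARM instructions are 4-byte aligned; this sketch simply rejects such a target.
        raise ValueError("misaligned ARM branch target")
    return target, False


def thumb_return_address(next_instr_addr):
    """Model the LR value saved by a BL/BLX executed in Thumb mode."""
    return next_instr_addr | 1


print(interworking_branch(0x1001))         # lands in Thumb code at 0x1000
print(interworking_branch(0x2000))         # lands in ARM code at 0x2000
print(hex(thumb_return_address(0x1004)))   # return address with the Thumb bit set
```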

ARMv5 at this point in time is ancient history. ARM architecture is up to v8.x by now, with 64-bit-wide-registers and a completely different instruction set. ARMv7 is still often seen around (v8 can also run in v7 mode) and is actually an almost perfect (but actually not entirely so) superset of ARMv5. So I could basically take a dev board for any ARMv7 chip, which are abundant and cheap, and use that as my base, right? Technically yes, but I did not go this way. To start with, few of these CPUs are documented well, so unless you use linux kernel, you'll never get them up - writing your own kernel and drivers for them is not feasible (I am looking at you, allwinner). "But," you might object, "what about Raspberry Pi, isn't its CPU fully documented?" I considered it, but discarded the idea - RasPi is terribly unstable, and I had no desire to build on such a shaky platform. Launch firefox on your RasPi, open dailymail or some other complex site, and go away, come back in 2 weeks, I guarantee you'll be greeted by a hung screen and a kernel panic on the serial console. If even Linux kernel developers cannot make this thing work stably, I had no desire to try. No thanks. So what then?

ARMv7M

The other option was to use a microcontroller - they are plentiful, documented, cheap, and available. ARM designs and sells a large number of small cores under the Cortex brand. Cortex-M0/M0+/M1 are cores based on the ARMv6M spec - basically they run the same Thumb instruction set that ARMv5 CPUs did, with a few extra instructions to allow them to manage privileged state (MRS/MSR/CPS). Cortex-M23 is their successor, which adds a few extra instructions (DIV/CBZ/CBNZ/MOVW/MOVT/B.W) which makes it a bit less of a pain in the ass, but it still is very much a pain for complex work. Cortex-M3/M4/M7 implement the ARMv7M spec, which has a very expanded Thumb2 instruction set. It is the same instruction set that ARM introduced into the ARM cores back in the day with ARMv6T2 architecture CPUs. These instructions are a mix of 2 and 4-byte long pieces and are actually pretty good for complex code, supporting long multiplies, complex control flow, and bitfield operations. They can also address all registers and not just half of them like the Thumb instruction set of yore. Cortex-M33 is the successor to these, adding a few more things we do not currently care about. Optionally, these cores can also include an FPU for hardware floating point support. We also do not care about that. There is only one problem: None of these CPUs support ARM instructions. They all only run Thumb/Thumb2. This means we can run most of PalmOS's Boot and UI, but many other things will fail. Not acceptable. Well, actually, since every library has to be entered in ARM mode, nothing will run...

My kingdom for an ARM!

It is at this point that I decided to extend PalmOS's module format to support direct entry into Thumb mode and converted my DAL to this new format. I also taught my module loader to understand when a library's entry point points to a simple ARM-to-Thumb thunk, and to resolve this directly. This allowed an almost complete boot without needing ARM. But this was not a solution. Large parts of the OS were still in ARM mode (things like MemMove, MemCmp, division routines), and if the goal was to run an unmodified OS and apps, editing everything everywhere was not an option. Some things we could just patch via SysPatchEntry. This I did to the above-mentioned MemMove and MemCmp for speed, providing optimal Thumb2 implementations. Other things I could do nothing about - things like integer division (which ARMv5 has no instruction for) were scattered in almost every library, and could not be patched away as they were not exported. We really did need something that ran ARM instructions.

But what if we try?

What exactly will happen if we try to switch an ARMv7M microcontroller into ARM mode? The manual luckily is very clear on that. It WILL switch, clear the status bit that indicated we're in Thumb mode, and then when it tries to execute the next instruction, it will take a UsageFault since it cannot execute in this mode. The Thumb BLX instruction of the form that always switches modes is undefined in ARMv7M, and if executed, the CPU will take a UsageFault as well, indicating an invalid instruction. This all sounds grim, but this is actually fantastic news! We can catch a UsageFault... If you see where I am going with this, and are appropriately horrified, thanks for paying attention! We'll come back to this story arc later, to give everyone a chance to catch up.

We need hardware, but developing on hardware is ... hard

CortexEmu to the rescue

I thought I could make this all work on a Cortex-M class chip, but I did not want to develop on one - too slow and painful. I also did not find any good emulators for Cortex-M class chips. At this point, I took a two-week-long break from this project to write CortexEmu. It is a fully functional Cortex-M0/M3/M23 emulator that faithfully emulates real Cortex hardware. It has a GDB stub so I can attach GDB to it to debug the running code. It has rudimentary emulated hardware to show a screen and to support an RTC, a console, and a touchscreen. It supports privileged and unprivileged mode, and emulates the memory protection unit (MPU) as well. CortexEmu remains the best way to develop rePalm.

Waaaah! You promised real hardware

Yes, yes, we'll get to that, and a lot more later, but that is still months later in the story, so be patient!

Um, but now we need a kernel...

Need a kernel? Why not Linux?

PalmOS needs a kernel with a particular set of primitives. We already discussed some (but definitely not all) reasons why Linux is a terrible choice. Add to that the fact that Cortex-M3 compatible Linux is slow AND huge, and it was simply not an option. So, what is?

I ended up writing my own kernel. It is simple, and works well. It will run on any Cortex-M class CPU, supports multithreading with priorities, precise timers, mutexes, semaphores, event groups, mailboxes, and all the primitives PalmOS wants like ability to force-pause threads, and ability to disable task switching. It also takes advantage of the MPU to add some basic safety like stack guards. Also, there is great (& fast) support for thread local storage, which comes in handy later. Why write my own kernel, aren't there enough out there? None of the ones out there really had the primitives I needed and bolting them on would take just as long.

So, uh, what about all that pesky ARM code?

The ARM code still was a problem

PalmOS still would not boot all the way to UI because of the ARM code. But, if you remember, a few paragraphs ago I pointed out that we can trap attempts to get into ARM mode. I wrote a UsageFault handler that did that, and then... I emulated it.

You do not mean...?

Oh, but I do. I wrote an ARM emulator that would read each instruction and execute it, until the code exited ARM mode, at which point I'd exit the emulation and resume native execution. The actual details of how this works are interesting since the emulator needs its own stack and cannot run on the stack of the emulated code. There also needs to be a place to stash the emulated registers since we cannot just keep them in the real registers (not enough registers for both). Exiting emulation is also kind of fun since you need to load ALL the registers, and the status register as well, all at once atomically. Not actually trivial on Cortex-M. Well, in any case, "emu.c" and "emuC.c" have the code - go wild and explore.
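The overall shape of such an interpreter is a fetch/decode/execute loop that stops when a branch targets Thumb code. The toy below is an illustrative sketch only, not the code from "emu.c": it keeps the emulated registers in a list, reads instructions from a dict, and understands just three things (MOV and ADD with an immediate operand, and BX). Condition codes, flags, and PC-relative operands are deliberately left out.

```python
def ror32(value, amount):
    """Rotate a 32-bit value right by `amount` bits."""
    amount &= 31
    return ((value >> amount) | (value << (32 - amount))) & 0xFFFFFFFF


def run_arm(mem, regs):
    """Interpret ARM code starting at regs[15]; return the Thumb address to resume at."""
    while True:
        pc = regs[15]
        instr = mem[pc]
        if instr >> 28 != 0xE:
            raise NotImplementedError("only the 'always' condition is handled here")
        if (instr & 0x0FFFFFF0) == 0x012FFF10:          # BX Rm
            target = regs[instr & 0xF]
            if target & 1:                              # leaving ARM mode: stop emulating
                regs[15] = target & 0xFFFFFFFE
                return regs[15]
            regs[15] = target & 0xFFFFFFFC
            continue
        if (instr >> 25) & 7 == 0b001:                  # data processing, immediate operand
            opcode = (instr >> 21) & 0xF
            rn, rd = (instr >> 16) & 0xF, (instr >> 12) & 0xF
            if instr & (1 << 20) or rn == 15 or rd == 15:
                raise NotImplementedError("flags and PC operands are omitted")
            imm = ror32(instr & 0xFF, 2 * ((instr >> 8) & 0xF))
            if opcode == 0b0100:                        # ADD
                regs[rd] = (regs[rn] + imm) & 0xFFFFFFFF
            elif opcode == 0b1101:                      # MOV
                regs[rd] = imm
            else:
                raise NotImplementedError("opcode not handled in this sketch")
            regs[15] = pc + 4
            continue
        raise NotImplementedError("instruction class not handled in this sketch")


# MOV R0, #5 ; ADD R0, R0, #1 ; BX LR   (LR holds a Thumb return address)
program = {0x8000: 0xE3A00005, 0x8004: 0xE2800001, 0x8008: 0xE12FFF1E}
registers = [0] * 16
registers[14] = 0x1001
registers[15] = 0x8000
resume_at = run_arm(program, registers)
print(registers[0], hex(resume_at))
```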

But isn't writing an emulator in C kind of slow?

You have no idea! The emulator was slow. I instrumented CortexEmu to count cycles, and came up with an average of 170 cycles of host CPU to emulate a single ARM instruction. Not good enough. Not even remotely. It is well known that emulators written in C are slow. C compilers kind of suck at optimizing emulator code. So what next? Well, I went ahead and rewrote the emulator core in assembly. Actually I did it twice. Once for ARMv7M (Cortex-M3 target) and once for ARMv6M (Cortex-M0 target). The speed improved a lot. Now for the M3 core I was averaging 14 host cycles per emulated instruction, and for the M0 it was 19. A very respectable emulator performance if I do say so myself.

So, is it fast enough now?

As mentioned before, on original PalmOS devices, ARM code was generally faster than Thumb, so most of the hottest, tightest, fastest code was written in ARM. For us, ARM is 14x slower than Thumb. So the code that was meant to be fastest is slow. But let us take an inventory of this code and see what it really is. Division routines are part of it. ARMv7M implements division in hardware, but ARMv5 did not (nor does ARMv6M). These routines are a hundred cycles or so in ARM mode. MemMove, MemSet and MemCmp we spoke about already, and we do not care because we replaced them, but lots of libraries had their own internal copies we cannot replace. My guess is that the compiler prefers to inline its own "memset" and "memcpy" in most cases. That made up a large part of the boot process's ARM code usage. Luckily, all of these functions are the same everywhere...

So, can we pattern-match some of these in the emulator code and execute faster native routines? I did this and boot process did go faster. The average per-instr overhead rose due to matching, but boot time shrank. Cool. But what happens after boot? After boot we meet the real monster... PACE's m68k emulator is written in ARM. 60 kilobytes of what is clearly hand-written assembly with lots of clever tricks. Clever tricks suck when you're stuck emulating them... So this means that every single m68k application (which is most of them) is now running under double emulation. Gross... Oh, also: slow. Something had to be done. I considered rewriting PACE, but that is a poor solution - there are a lot of ARM libraries and I cannot rewrite them all. Plus, in what way can I claim to be running an unmodified OS if I replace every bit of it?

There is one more way to make non-native code fast...

You do not mean...? (pt2)

Just in time: this

PACE contains a lot of hot code that is static. On real devices it lives in ROM and does not change. Most libraries are the same. So, what can we do to make it run faster? Translate it to what we can run natively, of course. Most people would not take on a task of writing a just-in-time translator alone. But that is just because they are wimps :) (Or maybe they reasonably assume that it is a huge time sink with more corner cases than one could shake a stick at)

JITs: how do we start?

Basically the same way we did for the emulator. We create a per-thread translation cache (TC) which will hold our translations. Why per thread? Because this avoids the problem of one thread flushing the cache while another is running in it with no end in sight. The TC will contain translation units (TU) each of which represents some translated code. Each TU contains its original "source" ARM address, and then just valid Thumb2 code. There will also be a hashtable which will map source "ARM" addresses to a bucket where the first TU for that hash value is stored. Each bucket is a linked list, and 4096 buckets are used. This is configurable. A fast & simple hash is used. Tested on a representative sample of addresses it gave good distribution. Now, whenever we take a UsageFault that indicates an attempted entry to ARM mode, we lookup the desired address in the hashtable. If we get a hit, we simply replace the PC in the exception frame with the "code" pointer of the matching TU and return. The CPU proceeds to execute native code quickly. Wonderful! What if we do not get a hit? We then save the state and replace the PC in the exception frame with the address of the translation code (we do not want to translate in kernel mode).
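A minimal sketch of that lookup path follows, with one cache object standing in for one thread's TC. The hash function, the field names, and the "pending_arm_pc" slot used to remember the untranslated address are all assumptions made for illustration; the text only says the real hash is "fast & simple" and that state is saved before jumping to the translator.

```python
NUM_BUCKETS = 4096  # configurable, per the text


class TranslationUnit:
    def __init__(self, arm_addr, code, next_tu=None):
        self.arm_addr = arm_addr  # original "source" ARM address
        self.code = code          # stands in for the pointer to the Thumb2 translation
        self.next = next_tu       # next TU in the same bucket (linked list)


def bucket_index(arm_addr):
    # Hypothetical hash: ARM code is 4-byte aligned, so drop the two low bits first.
    return (arm_addr >> 2) % NUM_BUCKETS


class TranslationCache:
    """One instance per thread, so one thread's flush cannot hurt another."""

    def __init__(self):
        self.buckets = [None] * NUM_BUCKETS

    def insert(self, arm_addr, code):
        i = bucket_index(arm_addr)
        self.buckets[i] = TranslationUnit(arm_addr, code, self.buckets[i])

    def lookup(self, arm_addr):
        tu = self.buckets[bucket_index(arm_addr)]
        while tu is not None and tu.arm_addr != arm_addr:
            tu = tu.next
        return tu


def on_usage_fault(tc, frame, translator_entry):
    """Redirect the faulting PC to a cached translation, or to the translator on a miss."""
    tu = tc.lookup(frame["pc"])
    if tu is not None:
        frame["pc"] = tu.code
    else:
        frame["pending_arm_pc"] = frame["pc"]  # remembered so the translator knows its input
        frame["pc"] = translator_entry
    return frame
```

Keeping the miss path as "return to unprivileged translator code" rather than translating inside the handler mirrors the point made above about not translating in kernel mode.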

Parlez-vous ARM?

The front end of a JIT basically just needs to ingest ARM instructions and understand them. We'll trap on any we do not understand, and try to translate all those that we do. Here we hit our first snag. Some games use instructions that are not valid. Bejeweled, I am looking at you! The game "Bejeweled" has some ARM code included in it and it likes to return by executing LDMDB R11, {R0-R12, SP, PC}^. Ignoring the fact that R0-R2 and R12 do not need to be saved and they are being inefficient, that is also not a valid instruction to execute in user mode at all. That little caret at the end means "also transfer SPSR to CPSR". That request is invalid in user mode and the ARM Architecture Reference Manual is very clear that executing this in user mode will have undefined effects. This explains why Bejeweled did not run under rePalm under QEMU. QEMU correctly refused to execute this insanity. Well, I dragged a Palm device out of a drawer and tested to see what actually happens if you execute this. Turns out that the caret is just ignored. Well, I guess my JIT will do that too. My emulator cores had no trouble with this instr: since it is undefined, treating it like it has no caret was safe, and thus they never even checked the bit that indicated it.
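As a small illustration of "ignore the caret", here is a C sketch. It is not rePalm's code and the helper names are made up. In the ARM encoding of LDM/STM the caret is bit 22, so ignoring it amounts to clearing that bit before decoding any further.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define LDM_STM_CARET_BIT (1u << 22)   /* the "S" bit: the ^ suffix in assembly */

/* True for the LDM/STM encoding class: bits 27..25 are 100 and the
   condition field is not the special 0b1111 value */
static bool isLdmStm(uint32_t instr)
{
    return ((instr >> 25) & 7u) == 4u && (instr >> 28) != 0xFu;
}

/* Hypothetical helper: treat "LDM ...^" exactly like "LDM ..." */
static uint32_t ignoreCaret(uint32_t instr)
{
    assert(isLdmStm(instr));
    return instr & ~LDM_STM_CARET_BIT;
}
```

With this, Bejeweled's LDMDB R11, {R0-R12, SP, PC}^ (0xE95BBFFF) turns into the plain LDMDB R11, {R0-R12, SP, PC} (0xE91BBFFF).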

Luckily for us, ARM only has a few instruction formats. Unluckily for us they are all pretty complex. Luckily, decoding is easy. Almost every ARM instruction is conditional and the top 4 bits determine if it executes at all or does not. Data Processing operations are always 3-operand. Destination reg, Source reg, and "Operand" which is ARM's addressing mode 1. It can be an immediate of certain forms, a register, a register shifted by an immediate, or a register shifted by a register. Say what?! Yup, you can do things like ADD R0, R1, R2, ROR R3. Be scared. Be very scared! Setting flags is optional. Loading/storing bytes or words uses addressing mode 2, which allows a use of a register plus/minus an immediate, or register plus/minus register, or register plus/minus register shifted by an immediate. All of these modes can be index, postindex, or index-with-writeback, so scary things like LDR R0, [R1], R2, LSL #12 can be concocted. Loading/storing halfwords or signed data uses addressing mode 3, which is just like mode 2 except no register shifts are available. This mode is also used for LDRD and STRD instructions that some ARMv5 cores implement (this is part of the optional DSP extension). Addressing mode 4 is used for LDM and STM instructions, which are terrifying in their complexity and number of corner cases. They can load or store any subset of registers to a given base address with pre-or-post increment-or-decrement and optional writeback. They are used for stack ops. And last, but not least, there are branches which are all encoded simply and decode easily. Phew...
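Here is what "decoding is easy" looks like for data processing instructions, as a hedged C sketch. The field positions are the standard ARM ones, but the struct, the enum and the function names are my own inventions for this example and do not come from "emuJit.c".

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

enum Op2Kind { Op2Imm, Op2RegShiftImm, Op2RegShiftReg };

struct DataProc {
    uint8_t cond, opcode, rn, rd;
    bool setFlags;
    enum Op2Kind kind;
    uint32_t imm;                         /* Op2Imm: decoded immediate value */
    uint8_t rm, shiftType, shiftAmt, rs;  /* register forms of the operand */
};

static uint32_t ror32(uint32_t v, unsigned by)
{
    by &= 31u;
    return by ? (v >> by) | (v << (32u - by)) : v;
}

/* Returns false if instr is not a data processing instruction */
static bool decodeDataProc(uint32_t instr, struct DataProc *d)
{
    bool immForm = ((instr >> 25) & 1u) != 0;

    assert(d != 0);
    if ((instr >> 28) == 0xFu || ((instr >> 26) & 3u) != 0)
        return false;                     /* some other encoding class */
    if (!immForm && (instr & 0x90u) == 0x90u)
        return false;                     /* multiplies, extra loads/stores */

    d->cond = (uint8_t)(instr >> 28);
    d->opcode = (instr >> 21) & 0xFu;
    d->setFlags = ((instr >> 20) & 1u) != 0;
    if (d->opcode >= 8 && d->opcode <= 11 && !d->setFlags)
        return false;                     /* MRS/MSR/BX and friends live here */
    d->rn = (instr >> 16) & 0xFu;
    d->rd = (instr >> 12) & 0xFu;
    d->imm = 0;
    d->rm = d->rs = d->shiftType = d->shiftAmt = 0;

    if (immForm) {                        /* 8-bit value rotated right by 2*rot */
        d->kind = Op2Imm;
        d->imm = ror32(instr & 0xFFu, 2u * ((instr >> 8) & 0xFu));
    } else {
        d->rm = instr & 0xFu;
        d->shiftType = (instr >> 5) & 3u; /* 0=LSL 1=LSR 2=ASR 3=ROR */
        if (instr & 0x10u) {              /* register shifted by register */
            d->kind = Op2RegShiftReg;
            d->rs = (instr >> 8) & 0xFu;
        } else {                          /* register shifted by immediate */
            d->kind = Op2RegShiftImm;
            d->shiftAmt = (instr >> 7) & 0x1Fu;
        }
    }
    return true;
}
```

Feeding it 0xE0810372 (the scary ADD R0, R1, R2, ROR R3 from above) yields Op2RegShiftReg with rm = 2, rs = 3 and shift type 3 (ROR).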

2 Thumbs do not make an ARM

Initially the thought was that the translation cannot be all that hard? The instructions look similar, and it shouldn't be all that bad. Then reality hit. Hard. Thumb2 has a lot of restrictions on operands, like for example SP cannot at all be treated like a general register, and LR and PC cannot ever be loaded together. It also lacks anything equalling addressing mode 1's ability to shift a register by a register as a third operand to an ALU operation. It lacks ability to shift a third register by more than 3, like mode 2 can in ARM. I am not even going to talk about LDM and STM! Oh, and then there is the issue of not letting the translated code know it is being translated. This means that it must still think it is running from original place, and if it reads itself, see ARM instructions. This means that we cannot ever leak PC's real value into any executable state. The practical upshot of that is that we can never emit a BL instruction, and whenever PC is read, we must instead produce an immediate value which is equal to what PC would have been, had the actual ARM code run from its actual place in memory. Not fun...

Thumb2's LDM/STM actually lack half the modes that ARM has (modes IB and DA) so we'd have to expand those instructions to a lot more code. Oh, and Thumb has limits on writeback that do not match ARM's (they are stricter) and also you can never use SP in the register set, nor can you ever store PC this way in Thumb2. At this point it becomes abundantly clear that this will not be an easy instruction in -> instruction out job. We'll need places to store temporary immediates, we'll need to rewrite lots of instructions, and we'll need to do it all without causing side effects. Oh, and it should be fast too!

A JIT's job is never over

LDM and STM, may they burn in hell forever!

How LDM/STM work in ARM

ARM has two multiple-register ops: LDM and STM. Each has a few addressing modes. First is the order: up or down in addresses (that is, does the base register give the address where the lowest-numbered register goes, or the highest-numbered one). Next is whether the base register itself is to be used, or should it be incremented/decremented first. This gives us the four basic modes: IA ("increment after"), IB ("increment before"), DA ("decrement after"), DB ("decrement before"). Besides that, it is optional to write back the updated base address to the base register. There are of course corner cases, like what value gets stored if the base register is stored with writeback enabled, or what value the base register will have if it is loaded while writeback is also specified. The ARM spec explicitly defines some of these cases as having unpredictable consequences.

For stack, ARM uses a full-descending stack. That means that at any point, the SP register points to the last ALREADY USED stack position. So, to pop a value, you load it from [SP], and then increment SP by 4. This would be done using an LDM instruction with an IA addressing mode. To push a value onto the stack, one should first decrement SP by 4, and then store the desired value into [SP]. This corresponds to an STM instruction with a DB addressing mode. IB and DA modes are not used for stack in normal ARM code.
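The four modes boil down to simple address arithmetic. The C sketch below is an illustration only (the names MultiMode, MultiPlan, planMulti and slotAddr are made up). It computes where the lowest-numbered register lands, what the written-back base would be, and the address of any given register in the list. In all four modes the lowest-numbered register ends up at the lowest address.

```c
#include <assert.h>
#include <stdint.h>

/* Mode numbering chosen as (P << 1) | U from the ARM encoding */
enum MultiMode { ModeDA = 0, ModeIA = 1, ModeDB = 2, ModeIB = 3 };

struct MultiPlan {
    uint32_t lowestAddr;   /* where the lowest-numbered register goes */
    uint32_t writeback;    /* new base value, if writeback is requested */
    unsigned count;        /* number of registers transferred */
};

static unsigned countRegs(uint16_t regList)
{
    unsigned n = 0;
    for (; regList; regList >>= 1)
        n += regList & 1u;
    return n;
}

static struct MultiPlan planMulti(enum MultiMode mode, uint32_t base, uint16_t regList)
{
    struct MultiPlan p;
    uint32_t bytes;

    assert(regList != 0);
    p.count = countRegs(regList);
    bytes = 4u * p.count;
    switch (mode) {
    case ModeIA: p.lowestAddr = base;              p.writeback = base + bytes; break;
    case ModeIB: p.lowestAddr = base + 4u;         p.writeback = base + bytes; break;
    case ModeDA: p.lowestAddr = base - bytes + 4u; p.writeback = base - bytes; break;
    default:     p.lowestAddr = base - bytes;      p.writeback = base - bytes; break; /* ModeDB */
    }
    return p;
}

/* Address used for register `reg`, which must be in the list */
static uint32_t slotAddr(const struct MultiPlan *p, uint16_t regList, unsigned reg)
{
    uint16_t below;

    assert(reg < 16 && ((regList >> reg) & 1u));
    below = regList & (uint16_t)((1u << reg) - 1u);
    return p->lowestAddr + 4u * countRegs(below);
}
```

For example, PUSH {R4, LR} with SP = 0x2000 is an STMDB with writeback: R4 goes to 0x1FF8, LR to 0x1FFC, and SP becomes 0x1FF8.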

How LDM/STM work in Thumb2

So why did I tell you all this? Well, while designing the Thumb2 instruction set, ARM decided what to support and what not to. This basically meant that uncommon things did not get carried forward. Yup...you see where this is going. Thumb2 does not support IB and DA modes. At all. Not cool. But there is more. Thumb2 forbids using PC or SP registers in the list of registers to be stored for STM. Thumb2 also forbids ever loading SP using LDM, also if an LDM loads PC, it may not also load LR, and if it loads LR, it may not also load PC. There is more yet... PC is not allowed as the base register, and the register list must be at least two registers long. This is a somewhat-complete list of what Thumb2 is missing compared to ARM.
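The restrictions listed above can be written down as a simple predicate. This C sketch checks only the rules named in this section (the real ARMv7-M rules have a few more corner cases, for example around writeback), and the function and enum names are assumptions for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

enum MultiMode { ModeDA = 0, ModeIA = 1, ModeDB = 2, ModeIB = 3 };

#define REG_SP 13u
#define REG_LR 14u
#define REG_PC 15u

static bool inList(uint16_t regList, unsigned reg)
{
    return ((regList >> reg) & 1u) != 0;
}

/* True if an ARM LDM/STM passes the Thumb2 restrictions listed in the text */
static bool fitsThumb2Multi(bool isLoad, enum MultiMode mode, unsigned baseReg, uint16_t regList)
{
    unsigned n = 0;
    uint16_t l;

    assert(baseReg < 16);
    for (l = regList; l; l >>= 1)
        n += l & 1u;

    if (mode != ModeIA && mode != ModeDB)
        return false;                 /* IB and DA do not exist in Thumb2 */
    if (baseReg == REG_PC)
        return false;                 /* PC may not be the base */
    if (n < 2)
        return false;                 /* list needs at least two registers */
    if (inList(regList, REG_SP))
        return false;                 /* SP can be neither stored nor loaded */
    if (!isLoad && inList(regList, REG_PC))
        return false;                 /* PC cannot be stored */
    if (isLoad && inList(regList, REG_PC) && inList(regList, REG_LR))
        return false;                 /* PC and LR cannot be loaded together */
    return true;
}
```

A plain STMDB SP!, {R4-R7, LR} passes, while Bejeweled-style LDMDB R11, {R0-R12, SP, PC} fails because it loads SP.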

But wait, there is more. Even the instructions that map nicely from ARM to Thumb2 and comply with all the restrictions of Thumb2 are not that simple to translate. For example, storing PC is, as always, hard - we need a spare register to hold the expected PC value so we can push it. But registers are pushed in order, so depending on what register we pick as our temporary reg, it might be out of order relative to the others, and we might need to split the store into a few stores. But, there is more yet. What if the store was to SP or included SP? We changed SP by pushing our temp reg, so we need to adjust for that. But what if this was an STMDB SP! (aka: PUSH)? Then we cannot pre-push a temp register that easily...

But wait, there's more ... pain

There is another complication. LDM/STM is expected to act as an atomic instruction to userspace. It is either aborted or resumable at system level. But in Thumb2 in Cortex-M chips, SP is special since the exception frame gets stored there. This means that SP must always be valid, and any data stored BELOW SP is not guaranteed to ever persist (since an interrupt may happen anytime). Luckily, on ARM it was also discouraged to store data below SP and this was rarely done. There is one common piece of PalmOS code that does this: the code around SysLinkerStub that is used to lazy-load libraries. For other reasons rePalm replaced this code anyways though. In all other cases the JIT will emit a warning if an attempt is made to load/store below SP.

As you see, this is very very very complex. In fact, the complete code to translate LDM/STM ended up being just over four thousand lines long and the worst-case translation can be 60-ish bytes. Luckily this is only for very weird instructions the likes of which I have never seen in real code. "So," you might ask, "how could this be tested if no code uses it?" I actually used a modified version of my uARM emulator to emulate both original code and translated code to verify that each destination address is loaded/stored exactly once and with proper values only, and then made a test program that would generate a lot of random valid LDM/STM instructions. It was then left to run over a few weeks. All bugs were exterminated with extreme prejudice, and I am now satisfied that it works. So here is how the JIT handles it, in general (look in "emuJit.c" for details).

Translating LDM/STM

- Check if the instruction triggers any undefined behaviour, or is otherwise not defined to act in a particular way as per the ARM Architecture Reference Manual. If so, log an error and bail out.

- Check if it can be emitted as a Thumb2 LDM/STM, that is: does it comply with ALL the restrictions Thumb2 imposes, and if so, and also if PC is not being stored, emit a Thumb2 LDM/STM

- Check if it can be emitted as a LDR/STR/LDRD/STRD while complying with Thumb2 limits on those. If so, that is emitted.

- A few special fast cases to emit translations for common cases that are not covered by the above (for example ADS liked to use STMIB for storing function parameters to stack)

- For unsupported modes IB and DA, if no writeback is used, they can be rewritten in terms of the supported modes.

- If the instruction loads SP, it is impossible to emit a valid translation due to how ARMv7-M uses SP. For this one special case, the JIT emits a special undefined instruction and we trap it and emulate it. Luckily no common code uses this ever!

- Finally, the generic slow path is taken:

- Generate a list of registers to be loaded/stored, and at what addresses.

- Calculate writeback if needed.

- If needed, allocate a temporary register or two (we need two if storing PC and SP) and spill their contents to stack

- For all registers left to be loaded/stored, see how many we can load/store at once, and do so. This involves emitting a set of instructions: LDR/STR/LDRD/STRD/LDM/STM until all is done.

- If we had allocated temporary registers, restore them

Slightly less hellish instructions

Addressing mode 1 was hard as well. Basically thanks to those rotate-by-register modes, we need a temporary register to calculate that value, so we can then use it. If the destination register is not used, we can use that as temp storage, since it is about to be overwritten anyways by the result, unless it is also one of the other source operands..or SP...or PC... oh god, this is becoming a mess. Now what if PC is also an operand? We need a temporary register to load the "fake" PC value into before we can operate on it. But once again we have no temporary registers. This got messy very quickly. Feel free to look in "emuJit.c" for details. Long story short: we do our best to not spill things to stack but sometimes we do have to.

The same applies to some complex addressing modes. Thumb2 optimized its instructions for common cases, which makes uncommon cases very hard to translate. Here it is even harder to find temporary registers, because if we push anything, we might need to account for that if our base register is SP. Once again: long story, scary story, see "emuJit.c". Basically: common things get translated efficiently, uncommon ones are not. Special case is PC-based loads. These are used to load constant data. In most cases we inline the constant data into the produced translations for speed.

Conditional instructions

Thumb2 does have ways to make conditional instructions: the IT instruction that makes the next 1-4 instructions conditional. I chose not to use it due to the fact that it also changes how flags get set by 2-byte Thumb instructions and I did not want to special case it. Also sometimes 4 instructions are not enough for a translation. Eg: some STMDA instructions expand to 28 instructions or so. I just emit a branch of opposite polarity (condition) over the translation. This works since these branches are also just 2 bytes long for all possible translation lengths.
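The "branch of opposite polarity" trick is easy to show. Below is a hedged C sketch (the function name is made up) that encodes the 2-byte Thumb conditional branch which skips a translation of a given size. It relies on two facts: ARM condition codes come in pairs that differ only in the lowest bit, and the 2-byte Thumb B<cond> can jump forward by up to 254 bytes from its PC (its own address plus 4).

```c
#include <assert.h>
#include <stdint.h>

/* Encode a 2-byte Thumb "B<cond>" that jumps over the translationBytes
   bytes that follow it whenever the ORIGINAL ARM condition is false.
   armCond is the 4-bit ARM condition field; AL (14) needs no branch. */
static uint16_t emitSkipBranch(uint8_t armCond, unsigned translationBytes)
{
    uint8_t inverted;
    unsigned offset;

    assert(armCond < 14);
    assert(translationBytes >= 2 && translationBytes <= 256);
    assert((translationBytes & 1u) == 0);

    inverted = armCond ^ 1u;              /* EQ<->NE, CS<->CC, GE<->LT, ... */
    /* Branch target is (branch address + 4) + offset, and we want to land
       at (branch address + 2) + translationBytes */
    offset = translationBytes - 2u;
    return (uint16_t)(0xD000u | ((unsigned)inverted << 8) | (offset >> 1));
}
```

For a 4-byte translation of an EQ-conditional instruction this produces 0xD101, which is BNE to just past the translation.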

Jumps & Calls

This is where it gets interesting. Basically there are two types of jumps/calls: those whose destinations are known at translation time, and those whose destinations are not. Those whose addresses are known at translation time are pretty simple to handle. We look up the destination address in our TC. If it is found, we literally emit a direct jump to that TU. This makes hot loops fast - no exit from translated code is needed. Indirect or computed jumps are not common, so one would think that they are not that important. This is wrong because there is one type of such jump that happens a lot: function return. We do not, at translation time, know where the return is going to go to. So how do we handle it? Well, if the code directly loads PC, everything will work as expected. Either it will be an ARM address and our UsageFault handler will do its thing or it will be a Thumb address and our CPU will jump to it directly. An optimization exists in case an actual BX LR instruction is seen. We then emit a direct jump to a function that looks up LR in the hash - this saves us the time needed to take an exception and return from it (~60 cycles). Obviously more optimizations are possible, and more will be added, but for now, this is how it is. So what do we do for a jump whose destination is known and we haven't yet translated it? We leave ourselves a marker, namely an instruction we know is undefined, and we follow that up with the target address. This way if the jump is ever actually taken (not all are), we'll take the fault, translate, and then replace that undefined instr and the word following it with an actual jump. Next time that jump will be fast, taking no faults.
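The marker-and-patch scheme for not-yet-translated destinations can be sketched in C as below. This is an illustration under assumptions, not the code from "emuJit.c": I picked the Thumb UDF #0 encoding (0xDE00) as the marker, store the target as two halfwords, and patch in a 4-byte Thumb2 B.W followed by a NOP. The real JIT may use a different marker and a different jump sequence. Addresses are passed as plain numbers so the sketch can be run on any host.

```c
#include <assert.h>
#include <stdint.h>

#define MARKER_UDF  0xDE00u   /* Thumb "UDF #0", chosen for this sketch */
#define THUMB_NOP   0xBF00u

/* Emit the 6-byte marker: undefined instr, then the ARM target address */
static void emitPendingJump(uint16_t *at, uint32_t armTarget)
{
    at[0] = MARKER_UDF;
    at[1] = (uint16_t)(armTarget & 0xFFFFu);
    at[2] = (uint16_t)(armTarget >> 16);
}

/* Read the target address back out of a marker (used by the fault handler) */
static uint32_t pendingJumpTarget(const uint16_t *at)
{
    assert(at[0] == MARKER_UDF);
    return (uint32_t)at[1] | ((uint32_t)at[2] << 16);
}

/* Encode Thumb2 "B.W" located at address `from`, jumping to address `to` */
static void encodeBW(uint32_t from, uint32_t to, uint16_t out[2])
{
    int32_t off = (int32_t)(to - (from + 4u));
    uint32_t u = (uint32_t)off;
    uint32_t s = (u >> 24) & 1u, i1 = (u >> 23) & 1u, i2 = (u >> 22) & 1u;
    uint32_t j1 = (~(i1 ^ s)) & 1u, j2 = (~(i2 ^ s)) & 1u;

    assert((off & 1) == 0 && off >= -(1 << 24) && off < (1 << 24));
    out[0] = (uint16_t)(0xF000u | (s << 10) | ((u >> 12) & 0x3FFu));
    out[1] = (uint16_t)(0x9000u | (j1 << 13) | (j2 << 11) | ((u >> 1) & 0x7FFu));
}

/* After translating the target: overwrite the marker with a real jump.
   atAddr is the address of the marker, tuCodeAddr the new TU's code. */
static void patchPendingJump(uint16_t *at, uint32_t atAddr, uint32_t tuCodeAddr)
{
    encodeBW(atAddr, tuCodeAddr, at);
    at[2] = THUMB_NOP;        /* leftover halfword is never reached */
}
```

On real hardware the patched code would also need whatever cache or pipeline synchronization the chip requires before it is executed again. That is outside this sketch.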

Translating a TU

The process is easy: translate instructions until we reach one that we decide is terminal. What is terminal? An unconditional branch is terminal. A call is too (conditional or not). Why? Because someone might return from it, and we'd rather have the return code be in a new TU so we can then find it when the return happens. An unconditional write to PC of any sort is terminal as well. There is a bit of cleverness also for jumps to nearby places. As we translate a TU, we keep track of the last few dozen instructions we translated and where their translations ended up. This way if we see a short jump backwards, we can literally inline a jump to that translation right in there, thus creating a wonderfully fast translation of this small loop. But what about short jumps forward? We remember those as well, and if before we reach our terminal instr we translate an address we remembered a past jump to from this same TU, we'll go back and replace that jump with a short one to here.

And if the TC is full?

You might notice that I said we emit jumps between TUs. "Doesn't this mean," you might ask, "that you cannot just delete a single TU?" This is correct. Turns out that keeping track of which TUs are used a lot and which are not is too much work, and the benefits of inter-TU jumps are too big to ignore. So what do we do when the TC is full? We flush it - literally throw it all away. This also helps make sure that old translations that are no longer needed eventually do get tossed. Each thread's TC grows up to a maximum size. Some threads never run a lot of ARM and end up with small TCs. The TC of the main UI thread will basically always grow to the maximum (currently 32KB).

Growing up

After the JIT worked, I rewrote it. The initial version was full of magic values and holes (cases that could happen in legitimate code but would be mistranslated). It also sometimes emitted invalid opcodes that Cortex-M4 would still execute (despite docs saying they were not allowed). The JIT was split into two pieces. The first was the frontend that ingested ARM instructions, maintained the TC, and kept track of various other state. The second was the backend. The backend had a function for each possible ARMv5 addressing mode or instruction format, and given ANY valid ARMv5 instruction, it could produce a sequence of ARMv7M instructions to perform the same task. For common cases the sequence was well optimized, for uncommon ones, it was not. However, the backend handles ANY possible valid ARMv5 request, even insane things like, for example, RSBS PC, SP, PC, ROR SP. No sane person would ever produce this instruction, but the backend will properly translate it. I wrote tests and ran them automatically to verify that all possible inputs are handled, and correctly so. I also optimized the hottest path in the whole system - the emulation of the BLX instruction in thumb. It is now a whopping 50 cycles faster, which noticeably impacted performance. As an extra small optimization, I noticed that oftentimes Thumb code would use a BLX simply to jump to an OsCall (which due to using R12 and R9 cannot be written in Thumb mode). The new BLX handler detects this and skips emulation by calling the requisite OsCall directly.

I then wrote a sub-backend for the EDSP extension (ARMv5E instructions) since some Sony apps use them. The reason for a separate sub-backend is that ARMv7E (Cortex-M4) has instructions we can use to translate EDSP instructions very well, while ARMv7 (Cortex-M3) does not, and requires longer instruction sequences to do the same work. rePalm supports both.

Later, I went back and, despite it being a huge pain, worked out a way to use the IT instruction on Cortex-M3+. This resulted in a huge amount of code refactoring - basically pushing "condition code" to every backend function and expecting it to conditionalize itself however it wishes. This produced a change with an over-4000-line diff but it works very well and resulted in a noticeable speed increase!

The Cortex-M0 backend

Why this is insane

It was quite an endeavor, but I wanted to see if I could make a working Cortex-M0 backend for my JIT. Cortex-M0 executes the ARMv6-m instruction set. This is basically just Thumb-1, with a few minor additions. Why is this scary? In Thumb-1, most instructions only have access to half the registers (r0..r7). Only three instructions have access to high registers: CMP, MOV, and ADD. Almost all Thumb-1 instructions always set flags. There are also no long-multiply instructions in Thumb-1. And, there is no RRX rotation mode at all. The confluence of all these issues makes attempting a one-to-one instruction-to-instruction translation from ARM to Thumb-1 a non-starter.

To make it all work, we'll need some temporary working space: a few registers. It is all doable with three with a lot of work, and comfortable with four. So I decided to use four work registers. We'll also need a register to point to our context (the place where we'll store extra state). And, for speed, we'll want a reg to store the virtual status register. Why do we need one of those? Because almost all of our Thumb-1 instructions clobber flags, whereas the ARM code we're translating expects flags to stick around during long instruction sequences. So our total is: 6. We need 6 registers. They need to be low registers since, as we had discussed, high registers are basically useless in Thumb-1.

The basics

Registers r0 through r3 are temporary work registers for us. The r4 register is where we keep our virtual status register, and r5 points to our context. We use r12 as another temporary. Yes it is a high-reg but sometimes we really just need to store something, so only being able to MOV something in and out of it is enough. So, what's in a context? Well, the state of the virtual r0 through r5 registers, as well as the virtual r12 and the virtual lr register. There, obviously, needs to be a separate context for every thread, since they may each run different ARM code. We allocate one the first time a thread runs ARM (it is actually part of the JIT state, and we copy it if we reallocate the JIT state).
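As a rough picture of what such a context holds, here is a C sketch. The field names, their order and the two helpers are assumptions for illustration. The real context holds more than this, and the real save/restore is emitted Thumb-1 code, not C. The helpers just model what moving the eight virtual registers between the real register file and the context amounts to.

```c
#include <assert.h>
#include <stdint.h>

/* Simulated "real" register file: r[13] = SP, r[14] = LR, r[15] = PC */
struct Regs {
    uint32_t r[16];
};

/* Hypothetical per-thread context: homes for the registers the JIT borrows */
struct JitContext {
    uint32_t vr[6];    /* virtual r0..r5 */
    uint32_t vr12;     /* virtual r12 */
    uint32_t vlr;      /* virtual lr */
};

/* Entering translated code: park the borrowed registers in the context */
static void contextSave(struct JitContext *ctx, const struct Regs *real)
{
    unsigned i;

    assert(ctx != 0 && real != 0);
    for (i = 0; i < 6; i++)
        ctx->vr[i] = real->r[i];
    ctx->vr12 = real->r[12];
    ctx->vlr = real->r[14];
}

/* Leaving translated code: put the virtual values back in real registers */
static void contextRestore(struct Regs *real, const struct JitContext *ctx)
{
    unsigned i;

    assert(ctx != 0 && real != 0);
    for (i = 0; i < 6; i++)
        real->r[i] = ctx->vr[i];
    real->r[12] = ctx->vr12;
    real->r[14] = ctx->vlr;
}
```

Registers r6 through r11 and SP are not in the context: translated code keeps them in the real registers the whole time.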

"But," you might say, "if PalmOS's Thumb code expects register values in registers, and our translated ARM code keeps some of them in a weird context structure, how will they work together?" This is actually complex. Before every translation unit, we emit a prologue. It will save the registers from our real registers into the context. At the end of every translation unit, we emit an epilogue that restores registers from the context into the real registers. When we generate jumps between translation units, we jump past these pieces of code, so as long as we are running in the translated code, we take no penalty for saving/restoring contexts. We only need to take that penalty when switching between translated code and real Thumb code. Actually, it turns out that the prologue and epilogue are large enough that emitting them inside every TU is a huge waste of space, so we just keep a copy of each inside a special place in the context, and have each TU just call them as needed. A later speed improvement I added was to have multiple epilogues, based on whether we know that the code is jumping to ARM code, Thumb code, or "not sure which". This allows us to save a few cycles on exiting translated code. Every cycle counts!

Fault dispatching

There is just one more problem: Those BLX instructions in Thumb mode. If you remember, I wrote about how they do not exist in ARMv7-m. They also do not exist in ARMv6-m. So we also need to emulate them. But, unlike ARMv7-m, ARMv6-m has no real fault handling ability. All faults are considered unrecoverable and cause a HardFault to occur. Clearly something had to be done to work around that. This actually led to a rather large side-project, which I published separately: m0FaultDispatch. In short: I found a way to completely and correctly determine the fault cause on the Cortex-M0, and recover as needed from many types of faults, including invalid memory accesses, unaligned memory accesses, and invalid instructions. With this final puzzle piece found, the Cortex-M0 JIT was functional.

Is PACE fast enough?

Those indirect jumps...

Unfortunately, emulation almost always involves a lot of indirect jumps. Basically that is how one does instruction decoding. 68k being a CISC architecture with variable-length instructions means that the decoding stage is complex. PACE's emulator is clearly hand-written in assembly, with some tricks. It is all ARM. It is actually the same instruction-for-instruction from PalmOS 5.0 to PalmOS 5.4. The surrounding code changed, but the emulator core did not. This is actually good news - means it was good as is. My JIT properly and correctly handles translating PACE, as evidenced by the fact that rePalm works on ARMv7-M. The main problem is that every instruction emulated requires at least one indirect jump (for common instructions), two for medium-commonness ones, and up to three for some rare ones. Due to how my JIT works, each indirect jump that is not a function return requires an exception to be taken (14 cycles in, 12 out), some glue code (~30 cycles), and a hash lookup (~20 cycles). So even in case that the target code has been translated, this adds 70-ish cycles to each indirect jump. This puts a ceiling on the efficiency of the 68k emulator at 1/70th the speed. Not great. PACE usually is about 1/15 the speed of the native code, so that is quite a slowdown. I considered writing better translation just for PACE, but it is quite nontrivial to do fast. Simply put, there isn't a simple fast way to translate something like LDR R0, [R11, R1, LSL #2]; ADD PC, R11, R0. There simply is no way to know where that jump will go, or that even R11 points to a location that is immutable. Sadly that is what PACE's top level dispatch looks like.

A special solution for a special problem

I had already fulfilled my goal of running PalmOS unmodified - PACE does work with my JIT, and the OS is usable and not slow, but I wanted a better solution and decided that PACE is a unique-enough problem to warrant it. The code emulator in PACE has a single entry point, and only calls out to other code in 10 clear cases: Line1010 (instruction starting with 0xA), Line1111 (instruction starting with 0xF), TRAP0, TRAP8, TRAPF (OsCall), Division By Zero, Illegal instruction, Unimplemented instruction, Trace Bit being set, and hitting a PC value of precisely 0xFFFFFFF0. So what to do? I wrote a tool "patchpace" that will take in a PACE.prc from any PalmOS device, analyze it to find where those handlers are in the binary, and find the main emulator core. It will then replace the core (in place if there is enough space, appended to the binary if not) with code you provide. The handler addresses will be inserted into your code at offsets the header provides, and a jump to your code will be placed where the old emulator core was. The header is very simple (see "patchpace.c") and just includes halfword offsets from the start of the binary to the entry, and to where to insert jumps to each of the abovementioned handlers (as BL or BLX instructions). The only param to the emulator is the state. It is structured thusly: first word is free for emulator to use as it pleases, then 8 D-regs, then the 8 A-regs, then PC, and then SR. No further data is allowed (PACE uses data after here). This same state must be passed to all the handlers. TRAPF handler also needs the next word passed to it (OsCall number). Yes, you understand this correctly, this allows you to bring your own 68k emulator to the party. Any 68k emulator will do, it does not need to know anything about PalmOS at all. Pretty sweet!
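The state layout described above maps naturally onto a C struct. The sketch below is my reading of that description, not a copy of anything in "patchpace.c": the struct and field names are made up, and I am assuming that every slot, including SR, is one 32-bit word.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical view of the emulator state handed to a replacement core */
struct M68kState {
    uint32_t scratch;   /* first word: free for the emulator's own use */
    uint32_t d[8];      /* D0..D7 */
    uint32_t a[8];      /* A0..A7 */
    uint32_t pc;
    uint32_t sr;        /* assumed to occupy a full word */
};                      /* nothing may follow: PACE uses the data after this */

/* 1 + 8 + 8 + 1 + 1 = 19 words, with no padding expected */
static_assert(sizeof(struct M68kState) == 19 * sizeof(uint32_t),
              "state must be exactly 19 words");
static_assert(offsetof(struct M68kState, pc) == 17 * sizeof(uint32_t),
              "PC must directly follow the A-regs");
```

A replacement core would take a pointer to this as its only parameter and hand the same pointer to each of the ten handlers.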

Any 68k emulator...

So where do we get us a 68k emulator? Well, anywhere? I wrote a simple one in C to test this idea, and it worked well, but really for this sort of thing you want assembly. I took PACE's emulator as a style guide, and did a LOT of work to produce a thumb2 68k emulator. It is much more efficient than PACE ever was. This is included in the "mkrom" folder as "PACE.0003.patch". As stated before, this is entirely optional and not required. But it does improve raw 68k speed by about 8.4x in the typical case.

But, you promised hardware...

Hardware has bugs

I needed a dev board to play with. The STM32F429 discovery board seemed like a good start. It has 8MB of RAM which is enough, 2MB of flash which is good, a display with a touchscreen. Basically it is perfect on paper. Oh, if only I knew how imperfect the reality is. Reading the STM32F429 reference manual it does sound like the perfect chip for this project. And ST does not quite go out of their way to tell you where to find the problems. The errata sheet is damning. Basically if you make the CPU run from external memory, put the stack in external memory, and SDRAM FIFO is on, exceptions will crash the chip (incorrect vector address read). Ok, I can work around that - just turn off the FIFO. Next erratum: Same story but if the FIFO is off, sometimes writes will be ignored and not actually write. Ouchy! Fine! I'll move my stacks to internal RAM. It is quite a rearchitecturing, but OK, fine! Still crashes. No errata about that! What gives? I removed rePalm and created a 20-line repro scenario. This is not in ST's errata sheet, but here is what I found: if PC points to external RAM, and WFI instruction is executed (to wait for interrupts in a low power mode), and then an interrupt happens after more than 60ms, the CPU will take a random interrupt vector instead of the correct one after waking up! Just imagine how long that took to figure out! How many sleepless nights ripping my hair out at random crashes in interrupt handlers that simply could not possibly be executing at that time! I worked around this by not using WFI. Power is obviously wasted this way, but this is ok for development for now, until I design a board with a chip that actually works!

Next issue: RAM address. STM32F429 supports two banks of RAM: 0 and 1. Bank 0 starts at 0xC0000000 and Bank 1 at 0xD0000000. This is a problem because PalmOS needs both RAM and flash to be below 0x80000000. Well, we're lucky. RAM Bank 0 is remappable to 0x00000000. Sweet.... Until you realize that whoever designed this board hated us! The board only has one RAM chip connected, so logically it is Bank 0. Right? Nope! It is Bank 1, and that one is not remappable. Well, damn! Now we're stuck and this board is unusable to boot PalmOS. The 0x80000000 limit is rather set in stone.

So why the 0x80000000 limit?

PalmOS has two types of memory chunks: movable and nonmovable. This is what an OS without access to an MMU does to avoid too much memory fragmentation. Basically when a movable chunk is not locked, the OS can move it, and one references it using a "handle". One can then lock it to get a pointer, use it, and then unlock when done. So what has this got to do with 0x80000000? PalmOS uses the top bit of a pointer to indicate if it is a handle or an actual pointer. The top bit being set indicates a handle, clear indicates a pointer. So now you see that we cannot really live with RAM and ROM above 0x80000000. But then again, maybe...

Two wrongs do not make a right, but do two nasty hacks?

Given that I've already decided that this board was only for temporary development, why not go further? Handle-vs-pointer disambiguation is only done in a few places. Why not patch them to invert the condition? At least for now. No, not at runtime. I actually disassembled and hand-patched 58 places total. Most were in Boot, where the MemoryManager lives, a few were in UI since the code for text fields likes to find out if a pointer passed to it is a pointer (noneditable) or a handle (editable). There were also a few in PACE since m68k had a SysTrap to determine the kind of pointer, which PACE implemented internally. Yes, this is not anymore "unmodified PalmOS" but this is only temporary, so I am willing to live with it! But, you might ask, didn't you also say that ROM and RAM both need to be below 0x80000000? If we invert the condition, we need them both above. But flash is at 0x08000000... Oops. Yup, we cannot use flash anymore. I changed the RAM layout again, carving out 2MB at 0xD0600000 to be the fake "ROM" and I copy the flash to it at boot. It works!
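The top-bit rule and its inverted version fit in a few lines of C. This is only a sketch of the idea (the enum and function names are made up). The real checks are scattered through the OS as hand-patched machine code, not a single function.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

enum HandleRule {
    StockRule,      /* unmodified PalmOS: top bit set means "handle" */
    InvertedRule    /* the hand-patched variant: top bit clear means "handle" */
};

/* Is this 32-bit value a handle (as opposed to a real pointer)? */
static bool isHandle(uint32_t value, enum HandleRule rule)
{
    bool topBitSet = (value & 0x80000000u) != 0;

    assert(rule == StockRule || rule == InvertedRule);
    return rule == StockRule ? topBitSet : !topBitSet;
}

/* Memory can only live where its addresses will not be mistaken for handles */
static bool addrUsableForMemory(uint32_t addr, enum HandleRule rule)
{
    return !isHandle(addr, rule);
}
```

Under the stock rule SDRAM Bank 1 at 0xD0000000 is unusable. Under the inverted rule it is fine, but flash at 0x08000000 no longer is, which is why the "ROM" had to move to 0xD0600000.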

Tales of more PalmOS reverse engineering

SD-card Support

Luckily, I had written a slot driver for PalmOS before, so writing an SD card driver was not hard. In fact, I reused some PowerSDHC source code! rePalm supports SD cards now on the STM32F469 dev board. On the STM32F429 board, they are also supported, but since the board lacks a slot, you need to wire them up yourself (CLK -> C12, CMD -> D2, DAT_0 -> C8). Due to how the board is already wired, only one-bit-wide bus will work (DAT_1 and DAT_2 are used for other things and cannot be remapped to other pins), so that limits the speed. Also since your wires will be long and floppy, the maximum speed is also limited. This means that on the STM32F429 the speed is about 4Mbit/sec. On the STM32F469 board the speed is a much more respectable 37MBit/sec. Higher speeds could be reached with DMA, but this is good enough for now. While writing the SD card support for the STM32F4 chips, I found a hardware bug, one that was very hard to debug. The summary is this: SD bus allows the host to stop the clock anytime. So the controller has a function to stop it anytime it is not sending commands or sending/receiving data. Good so far. But the data lines can also be used to signal that the card is busy. Specifically, the DAT_0 line is used for that. The problem is that most cards use the clock line as a reference as to when they can change the state of the DAT lines. This means that if you do something that the card can be busy after, like a write, and then shut down the clock, the card will keep the DAT_0 line low forever, since it is waiting for the clock to tick to raise it. "So," you will ask, "why not enable clock auto-stopping except for this one command?" It does not work since clock auto-stopping cannot be easily flipped on and off. Somehow it confuses the module's internal state machine if it is flipped while the clock is running. So, why stop the clock at all? Minor power savings. Definitely not enough to warrant this mess, so I just disabled the auto-stopping function.
A week to debug, and a one line fix! The slot driver can be seen in the "slot_driver_stm32" directory.
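
As a rough illustration of what such a one-line fix can look like, here is a minimal sketch that builds a clock-control register value with the auto-stop feature left off. The bit positions follow the SDIO_CLKCR layout in the STM32F4 reference manual. That the PWRSAV bit is the "auto-stopping function" described above is an assumption, and the helper name sdio_clkcr_value is made up for this example. It is not the code from "slot_driver_stm32".

```c
#include <stdint.h>

/* Bit layout of SDIO_CLKCR as given in the STM32F4 reference manual.
 * ASSUMPTION: PWRSAV is the clock auto-stop feature the text refers to. */
#define SDIO_CLKCR_CLKDIV_MASK 0x000000FFu  /* clock divider              */
#define SDIO_CLKCR_CLKEN       (1u << 8)    /* clock output enable        */
#define SDIO_CLKCR_PWRSAV      (1u << 9)    /* stop clock when bus idle   */
#define SDIO_CLKCR_WIDBUS_MASK (3u << 11)   /* 00 = one-bit-wide data bus */

/* Hypothetical helper: compute a new CLKCR value for a one-bit bus with
 * the given divider, making sure the clock is never auto-stopped. */
uint32_t sdio_clkcr_value(uint32_t old, uint8_t clkdiv)
{
    uint32_t v = old;

    v &= ~(SDIO_CLKCR_CLKDIV_MASK | SDIO_CLKCR_WIDBUS_MASK);
    v &= ~SDIO_CLKCR_PWRSAV;   /* the "one line": keep the clock running */
    v |= clkdiv;
    v |= SDIO_CLKCR_CLKEN;
    return v;
}
```

On real hardware the result would be written to the controller's CLKCR register once, during initialization, and never toggled afterwards.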

Serial Port Support

Palm Inc did document how to write a serial port driver for PalmOS 4. There were two types: virtual drivers and serial drivers. The former was for ports that were not hardwired to the external world (like the port connected to the bluetooth chip or the Infra-red port), and the latter for ports that were (like the cradle serial port). PalmOS 5 merged the two types into a unified "virtual" type. Sadly this was not documented. It borrowed from both port types in PalmOS 4. I had to reverse engineer the OS for a long time to figure it out. I produced a working idea of how this works on PalmOS 5, and you can see it in the "vdrvV5.h" include file. This information is enough to produce a working driver for a serial port, IrDA SIR port, and USB for HotSync purposes.

Actually making the serial port work on the STM32F4 hardware was a bit hard. The hardware has only a single one-byte buffer. This means that to not lose any received data at high data rates, one needs to use hardware flow control or make the serial port interrupt the highest priority and hope for the best. This was unacceptable for me. I decided to use DMA. This was a fun chance to write my first PalmOS 5 library that can be used by other libraries. I wrote a DMA library for STM32F4-series chips. The code is in the "dma_driver_stm32" directory. With this, one would think that all would be easy. No. DMA needs to know how many bytes you expect to receive. In case of generic UART data receive, we do not know this. So how do we solve this? With cleverness. DMA can interrupt us when half of a transfer is done, and again when it is all done. DMA can be circular (restart from beginning when done). This gets us almost as far as we need to go. Basically as long as data keeps arriving, we'll keep getting one of these interrupts, and then the other, in order. In our interrupt handler, we just need to see how far into the buffer we are, and report the bytes since the last time we checked as new data. As long as our buffer is big enough that it does not overflow in the time it takes us to handle these interrupts we're all set, right? Not quite. What if we get just one byte? This is less than half a transfer so we'll never get an interrupt at all, and thus will never report this to the clients. This is unacceptable. How do we fix it? The STM32F4 UART has an "IDLE detect" mode. This will interrupt us if after a byte has been RXed, four bit times have expired with no further character starting. This is basically just what we need. If we wire this interrupt to our previous handling code for the circular buffer, we'll always be able to receive data as fast as it comes, no matter the sizes. Cool! The serial driver I produced does this, and can be seen in the "uart_driver_stm32" directory. 
I was able to successfully HotSync over it! IrDA is supported too. It works well. See the photo album for a video demo!
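
The circular-buffer bookkeeping described above can be sketched in a few lines of hardware-independent C. This is a simplified model under stated assumptions, not the code from "uart_driver_stm32": the names rx_ring, rx_ring_service and rx_collect_sink and the ring size are all made up for illustration. The one handler is meant to be called from all three interrupts (half-transfer, transfer-complete, and UART IDLE) with the DMA's current write index, which on STM32 would typically be derived as ring size minus the stream's remaining-transfer count.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define RX_RING_SIZE 64u            /* hypothetical ring size */

/* Hypothetical client callback: receives each newly arrived chunk. */
typedef void (*rx_sink_fn)(const uint8_t *data, size_t len, void *ctx);

struct rx_ring {
    uint8_t    buf[RX_RING_SIZE];   /* circular-mode DMA writes RX bytes here */
    size_t     last_pos;            /* everything before this was reported    */
    rx_sink_fn sink;
    void      *ctx;
};

/* Report all bytes received since the previous call. dma_pos is the index
 * the DMA will write to next. A full lap between two calls cannot be
 * detected, so the ring must be large enough for the worst-case latency. */
void rx_ring_service(struct rx_ring *r, size_t dma_pos)
{
    assert(dma_pos <= RX_RING_SIZE);
    if (dma_pos == RX_RING_SIZE)
        dma_pos = 0;                /* the write index wrapped to the start */

    if (dma_pos == r->last_pos)
        return;                     /* nothing new */

    if (dma_pos > r->last_pos) {
        r->sink(r->buf + r->last_pos, dma_pos - r->last_pos, r->ctx);
    } else {
        /* wrapped: first the tail of the ring, then the new head */
        r->sink(r->buf + r->last_pos, RX_RING_SIZE - r->last_pos, r->ctx);
        if (dma_pos > 0)
            r->sink(r->buf, dma_pos, r->ctx);
    }
    r->last_pos = dma_pos;
}

/* Demo sink that appends everything it is given to a flat buffer. */
struct rx_collect {
    uint8_t data[256];
    size_t  len;
};

void rx_collect_sink(const uint8_t *data, size_t len, void *ctx)
{
    struct rx_collect *c = (struct rx_collect *)ctx;

    if (len > sizeof c->data - c->len)
        len = sizeof c->data - c->len;   /* drop what does not fit */
    memcpy(c->data + c->len, data, len);
    c->len += len;
}
```

Because the IDLE interrupt calls the same function, a lone byte is reported as soon as the line goes quiet, while long bursts are picked up by the half and full interrupts.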

Yes, you can try it!

If you want to try, on the STM32F429 discovery board, the "RX" unpopulated 0.1 inch hole is the STM32's transmit (yes, I know, weird label for a transmit pin). B7 is the STM32's receive pin. If you connect a USB-to-serial adapter there, you can HotSync over serial. If you instead connect an IrDA SIR transceiver there, you'll get working IR. I used the MiniSIR2 transceiver from Novalog, Inc. It is the same one as most Palm devices use.

Vibrate & LED support

Adding vibration and LED support was never documented, since those are hardware features that vendors handle. Luckily, I had reverse engineered this a long time ago, when I was adding vibration support to T|X. Turns out that I almost got it all right back then. A bit more reverse engineering yielded a complete picture of the proper API. LED follows the same API as vibrator: one "GetAttributes" function and one "SetAttributes" function. The settable things are the pattern, speed, delay in between repetitions, and number of repetitions. The OS uses them as needed and automatically adds "Vibrate" and "LED" settings to the "Sounds and Alerts" preferences panel if it notices the hardware is supported. And rePalm now supports both! The code is in "halVibAndLed.c", feel free to peruse it at your leisure.

Networking support (WIP)

False starts

I really wanted to add support for networking to rePalm. There were a few ways I could think of to do that, such that all existing apps would work. One could simply replace Net.lib with one with a similar interface but controlled by me. I could then wire it up to any interface I wanted to, and all would be magical. This is a poor approach. To start with, while large parts of Net.lib are documented, there are many parts that are not. Having to figure them out would be hard, and proving correctness and staying bug-compatible even more so. Then there is the issue with wanting to run an unmodified PalmOS. Replacing random libraries diminishes the ability to claim that. No, this approach would not work. The next possibility was to make a fake serial interface, and tell PalmOS to connect via it, via SLIP or PPP to a fake remote machine. The other end of this serial port could go to a thread that talks to our actual network interface. This can be made to work. There would be overhead of encoding and decoding PPP/SLIP frames, and the UI would be confusing and all wrong. Also, I'd need to find ways to make the config UI. This is also quite a mess. But at least this mess is achievable. But maybe there is a better approach?
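
To give a feel for the framing overhead mentioned above, here is a minimal sketch of SLIP encoding as defined in RFC 1055. It only illustrates the rejected fake-serial approach and is not rePalm code. The function name slip_encode is made up for this example. The byte values are the ones the RFC specifies.

```c
#include <stddef.h>
#include <stdint.h>

/* Special byte values from RFC 1055 */
#define SLIP_END     0xC0u   /* marks the end of a packet       */
#define SLIP_ESC     0xDBu   /* introduces an escaped byte      */
#define SLIP_ESC_END 0xDCu   /* ESC + this means a literal 0xC0 */
#define SLIP_ESC_ESC 0xDDu   /* ESC + this means a literal 0xDB */

/* Encode one packet into out. Returns the number of bytes written,
 * or 0 if out_cap is too small. Worst case is 2 * in_len + 1 bytes. */
size_t slip_encode(const uint8_t *in, size_t in_len,
                   uint8_t *out, size_t out_cap)
{
    size_t n = 0;

    for (size_t i = 0; i < in_len; i++) {
        uint8_t b = in[i];

        if (b == SLIP_END || b == SLIP_ESC) {
            if (out_cap - n < 2)
                return 0;
            out[n++] = SLIP_ESC;
            out[n++] = (b == SLIP_END) ? SLIP_ESC_END : SLIP_ESC_ESC;
        } else {
            if (out_cap - n < 1)
                return 0;
            out[n++] = b;
        }
    }
    if (out_cap - n < 1)
        return 0;
    out[n++] = SLIP_END;     /* frame terminator */
    return n;
}
```

Every packet would have to pass through a loop like this on the way out and through the matching decoder on the way in, only for the other end of the fake serial port to undo it immediately.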

The scary way forward

Conceptually, there is a better approach. PalmOS's Net.lib supports pluggable network interfaces (I call it a NetIF driver). You can see a few on all PalmOS devices: PPP, SLIP, Loopback. Some others also have one for WiFi or Cellular. So all I have to do is produce a NetIF driver. Sounds simple enough, no? Just as you'd expect, the answer is a strong, resounding, and unequivocal "no!" Writing NetIF drivers was never documented. And a network interface is a lot harder than a serial port driver (which was the previous plug-in driver interface of PalmOS that I had reverse engineered). Reverse engineering this would be hard.

Those who study history...

I started with some PalmOS 4.x devices and looked at SLIP/PPP/Loopback NetIF drivers. Why? Like I had mentioned earlier, in 68k, the compiler tends to leave function names around in the binary unless that is turned off. This is a huge help in reverse engineering. Now, do not let this fool you, function names alone are not that much help. You still need to guess structure formats, parameters, etc. Thus despite the fact that Net.lib and the NetIF driver interface both changed between PalmOS 4.x and PalmOS 5.x, figuring out how NetIF drivers worked in PalmOS 4.x would still provide some foundational knowledge. It took a few weeks until I thought I had that knowledge. Then I asked myself: "Was there a PalmOS 4.x device with WiFi?" Hm... There was. Alphasmart Dana Wireless had WiFi. Now that I thought I had a grip on the basics of how these NetIF drivers worked, it was time to look at a more complex one, since PPP, SLIP, and Loopback are all very simple. Sadly, Alphasmart's developers knew how to turn off the insertion of function names into the binary. Their WiFi driver was still helpful, but it took weeks of massaging to make sense of it. It is approximately at this point that I realized that Net.lib had many versions and I had to look at others. I ended up disassembling each version of Net.lib that existed to see the evolution of the NetIF driver interface and Net.lib itself. Thus I looked at Palm V's version, Palm Vx's, Palm m505's, and Dana's. The most interesting changes were with v9, where support for ARP & DHCP was merged into Net.lib, whereas previously each NetIF driver that needed those embedded its own logic for them.

On to OS 5's Net.lib

This was all nice and great, but I was not really in this to understand how NetIF drivers worked in PalmOS 4.x. Time had come to move on to reverse-engineering how PalmOS 5.x did it. I grabbed a copy of Net.lib from the T|T3, and started tracing out its functions, matching them up to their PalmOS 4.x equivalents. It took a few more weeks, but I more or less understood how PalmOS 5.x Net.lib worked.

I found a bug!

Along the way I found an actual bug: a use-after-free in arp_close()

Well, that was easy...

Then I started disassembling PalmOS 5.x SLIP/PPP/Loopback NetIF drivers to see how they had changed from PalmOS 4.x. I assumed that nobody really changed their logic, so any changes I saw could be hints about changes in the Net.lib and NetIF structure between PalmOS 4.x and PalmOS 5.x. It turned out that not that much had changed. Structures got realigned, a few attribute values got changed, but otherwise it was pretty close. It is at this point that I congratulated myself, and decided to start writing my own NetIF driver to test my understanding.

NOT!

The self-congratulating did not last long. It turned out that in my notes I had marked a few things I had thought inconsequential as "to do: look into this later". Well, it appears that they were not inconsequential. For example: the callback from DHCP to the NetIF driver to notify it of DHCP status was NOT purely informative as I had thought, and in fact a large amount of logic has to exist inside it. That logic, in turn, touches the insides of the DhcpState structure, half of which I had not fully understood since I thought it was opaque to the NetIF driver. Damn, well, back to IDA and more reverse engineering. At some point in time here, to understand what various callbacks between Net.lib and the NetIF driver did, I realized that I needed to understand DHCP and ARP a lot better than I did. After sinking some hours into reading the DHCP and ARP RFCs, I dove back into the disassembled code. It all sort of made sense. I'll summarize the rest of the story: it took another three weeks to document every structure and function that the ARP and DHCP code uses.

More reverse engineering

There was just one more thing left. As the NetIF driver comes up, it is expected to show UI and call back into Net.lib at various times. Different NetIF drivers I disassembled did this in very different ways, so I was not clear as to what was the proper way to do this. At this point I went to my archive of all the PalmOS ROMs, and wrote a tool to find all the files with the type neti (NetIF drivers have this type), skip all that are PPP, SLIP, or Loopback, and copy the rest to a folder, after deduplicating them. I then disassembled them all, producing diagrams and notes about how each brought itself up and down, where UI was shown or hidden, and when each step was taken. While doing this, I saw some (but not much) logging in some of these drivers, so I was able to replace my own names for various values and structs with the more proper ones that writers of those NetIF drivers were kind enough to leak in their log statements. I ended up disassembling: Sony's "CFEtherDriver" from the UX50, Hagiwara's WiFi memorystick driver "HNTMSW_neti", Janam's "WLAN NetIF" from the XP30, Sony's "CFEtherDriver" from the TH55, PalmOne's "PxaWiFi" from Tungsten C, PalmOne's "WiFiLib" from the TX, and PalmOne's "WiFiLib" from their WiFi SD card. Phew, that was a lot! Long story short: the reverse engineered NetIF interface is documented in "netIfaceV5.h" and it is enough that I think a working NetIF driver can be written using it.
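
The filtering step of such a tool is simple enough to sketch. The following is not the actual tool, only an illustration that assumes each database has already been extracted from its ROM as a standalone PRC file. In that format the 78-byte header stores the four-character type at offset 60 and the creator at offset 64. The function names are made up, and identifying the PPP, SLIP, and Loopback drivers by their creator IDs is an assumption made for this sketch.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define PRC_HEADER_SIZE    78u   /* fixed-size PRC/PDB database header */
#define PRC_OFFSET_TYPE    60u   /* four-character database type       */
#define PRC_OFFSET_CREATOR 64u   /* four-character creator ID          */

/* Nonzero if the header carries the given four-character type. */
int prc_is_type(const uint8_t *hdr, size_t len, const char *type4)
{
    if (len < PRC_HEADER_SIZE)
        return 0;
    return memcmp(hdr + PRC_OFFSET_TYPE, type4, 4) == 0;
}

/* Nonzero if this is a 'neti' database whose creator is NOT in the
 * caller-supplied skip list (a list of four-character creator IDs). */
int prc_wanted_netif(const uint8_t *hdr, size_t len,
                     const char *const *skip_creators, size_t n_skip)
{
    if (!prc_is_type(hdr, len, "neti"))
        return 0;
    for (size_t i = 0; i < n_skip; i++) {
        if (memcmp(hdr + PRC_OFFSET_CREATOR, skip_creators[i], 4) == 0)
            return 0;
    }
    return 1;
}
```

Deduplication can then be done by hashing or directly comparing the contents of the files that pass this filter.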

"You think?" you might ask, "have you not tested it?". Nope, I am still writing my NetIF driver so stay tuned...

1.5 density support

Density basics

PalmOS since version 4.2 has support for multiple screen densities. That is to say that one could have a device with a screen of the same size, but more pixels in it and still see things rendered at the same size, just with more detail. Sony did have high-res screens before Palm, and HandEra did before both of them, but Palm's solution was the first OS-scale one, so that is the one that PalmOS 5 used. The idea is simple. Each Bitmap/Window/Font/etc has a coordinate system associated with it, and all operations use that to decide how to scale things. 160x160 screens were termed 72ppi (no relation to actual points or inches), and the new 320x320 ones were 144ppi (double density). This made life easy - when the proper density image/font/etc was missing, one could pixel-double the low-res one. The reverse worked too. Pen coordinates also had to be adjusted, of course, since the developer could now request to work in a particular coordinate system, and the whole system API then had to honor it.
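
To make the arithmetic concrete, here is a minimal sketch of converting a coordinate between two densities and of pixel-doubling a low-density image. The function and constant names are made up for illustration and are not the PalmOS API. The image is assumed to be 8 bits per pixel with no row padding, which is simpler than real PalmOS bitmaps.

```c
#include <stddef.h>
#include <stdint.h>

/* Density values as named in the text: 160x160 is "72", 320x320 is "144". */
enum { DENSITY_LOW = 72, DENSITY_DOUBLE = 144 };

/* Convert a coordinate from one density's coordinate system to another's. */
int32_t scale_coord(int32_t v, int32_t from_density, int32_t to_density)
{
    return v * to_density / from_density;
}

/* Pixel-double a w x h image into a (2w) x (2h) one: every source pixel
 * becomes a 2x2 block. dst must have room for 4 * w * h bytes. */
void pixel_double_8bpp(const uint8_t *src, size_t w, size_t h, uint8_t *dst)
{
    size_t dw = 2 * w;   /* destination row length */

    for (size_t y = 0; y < h; y++) {
        for (size_t x = 0; x < w; x++) {
            uint8_t p = src[y * w + x];

            dst[(2 * y)     * dw + 2 * x]     = p;
            dst[(2 * y)     * dw + 2 * x + 1] = p;
            dst[(2 * y + 1) * dw + 2 * x]     = p;
            dst[(2 * y + 1) * dw + 2 * x + 1] = p;
        }
    }
}
```

With a factor of exactly two, both directions map onto whole pixels: a pen tap at 144-density coordinate 200 lands on 72-density coordinate 100, and a 72-density point at 80 lands on 160.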